We are working on a series of case studies to share practice in using Generative AI in Learning and Teaching activities.

In this series of blogposts, colleagues who are using Generative AI in their teaching will share how they went about designing these activities.

We’re delighted to welcome Dr Gareth Hoskins (tgh@aber.ac.uk) from DGES in this blogpost.

Case Study # 3: Classroom evaluation of Generative AI in the Department of Geography and Earth Sciences

What is the activity?

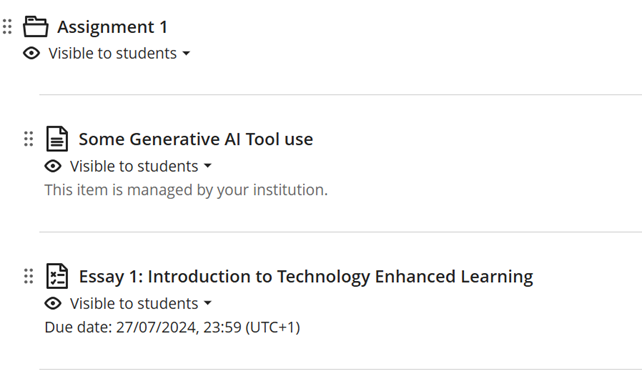

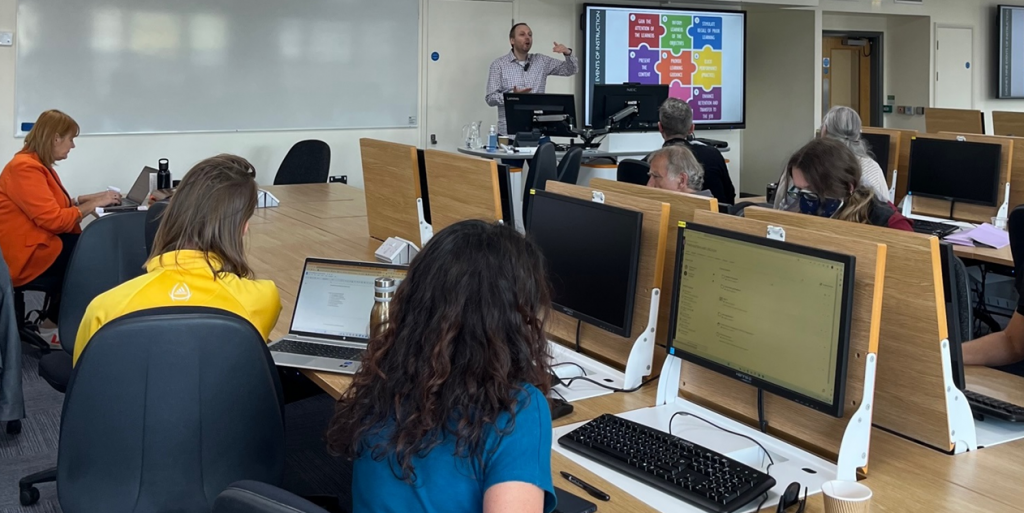

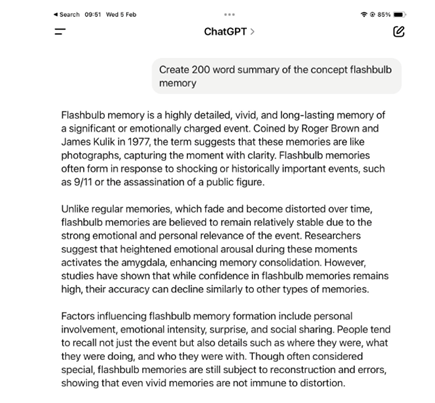

This was a classroom evaluation of an AI-generated summary of the scientific concept ‘flashbulb memory’, run as part of a lecture on ‘individual memory’ in the third-year human geography/sociology module GS37920 Memory Cultures: heritage, identity and power.

I prompted ChatGPT with the instruction “Create a 200-word summary of the concept of flashbulb memory”, took a screengrab of the resulting text and embedded it in my lecture slides. I then gave the class three minutes to read it and discuss it at their tables, asking them specifically to respond to the following questions:

- What biases does the content create?

- Whose interests are served?

- Where are the sources coming from?

What were the outcomes of the activity?

The discussion touched less on the questions I had posed and focused more on the ChatGPT content itself, of which students were much more critical than I had anticipated. They noted the dull tone, the repetition, the uncertainty surrounding the facts presented, the vague approach and a general lack of specificity. The students who raised these points showed a surprising degree of GenAI literacy, and this was conveyed to the class as a whole. During the discussion, the students became more aware of the utility of GenAI tools, more comfortable speaking about how they use them and might go on to use them, and more aware of how the tools' limitations and weaknesses might affect the content they generate.

I developed the exercise using the UCL guidance webpage ‘Designing Assessments for an AI-enabled world’ (https://www.ucl.ac.uk/teaching-learning/generative-ai-hub/designing-assessments-ai-enabled-world) and redesigned the exam questions on the module, replacing generic appraisals of famous academics’ contributions to various disciplinary debates with hypothetical scenario-based questions that are much more applied.

How was the activity introduced to the students?

My intention was to acknowledge that we exist in an AI-enabled world, which creates opportunities but also problems for learning. I also used the exercise to introduce the risks relating to assessment and to outline my own strategy for assessing this module: real-life, problem-based, seen-exam questions that require higher-level skills of evaluation and critical thinking, applied to “module-only” content and to recent academic publications, which GenAI essay-writing tools struggle to access.

How did it help with their learning?

The activity helped students become more familiar with the use of GenAI as a “research assistant” (for creating outlines and locating sources) and created an environment for open discussion about the limitations of AI-generated content: vagueness, hallucination, lack of understanding, and lack of access to in-house module content on Blackboard or to up-to-date research (articles published in the last two years).

How will you develop this activity in the future?

I would flag other systems, including DeepSeek, Gemini, Microsoft Copilot and Claude, discuss their origins, pros and cons, and, crucially, caution students about their environmental and intellectual property consequences.

Keep a lookout for our next blogpost on Generative AI in Learning and Teaching case studies.